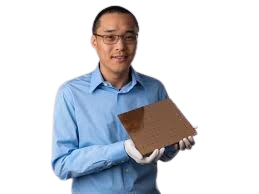

About Cerebras

About us

Cerebras is a leading company in AI and high-performance computing, known for its Wafer Scale Engine (WSE), the largest computer chip ever created. The WSE is designed to handle large AI and machine learning workloads efficiently. Cerebras technology is applied in areas such as healthcare, scientific research, and large-scale data processing, where it supports complex computational tasks. Its innovations continue to advance the capabilities of AI hardware.

Wafer Scale Engine

Cerebras Systems is recognized for its innovations in artificial intelligence (AI) and high-performance computing (HPC). Its flagship product is the Wafer Scale Engine (WSE), the largest computer chip ever built. The generations covered here are:

Wafer Scale Engine (WSE):

-

WSE-1: Introduced in 2019, featuring 1.2 trillion transistors, 400,000 AI-optimized cores, and 18 GB of on-chip memory. Manufactured using a 16nm process.

-

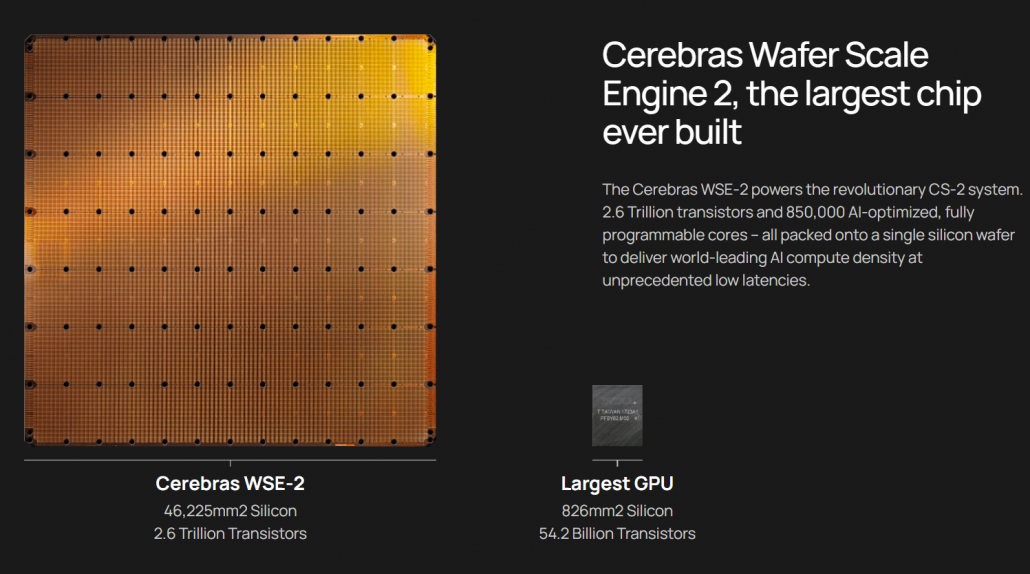

WSE-2: Released in 2021, with 2.6 trillion transistors, 850,000 AI-optimized cores, and 40 GB of on-chip memory. Manufactured using a 7nm process.
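To make the generational jump concrete, the figures listed above can be put side by side. This is a minimal sketch, not an official Cerebras tool: the `WseGeneration` class and the `scaling_factors` helper are hypothetical names introduced only for illustration, and the numbers are the ones quoted in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WseGeneration:
    """Headline specifications as quoted in the text (hypothetical container)."""
    name: str
    year: int
    transistors: float        # transistor count
    cores: int                # AI-optimized cores
    on_chip_memory_gb: float  # on-chip memory in GB
    process_nm: int           # manufacturing process node


WSE_1 = WseGeneration("WSE-1", 2019, 1.2e12, 400_000, 18, 16)
WSE_2 = WseGeneration("WSE-2", 2021, 2.6e12, 850_000, 40, 7)


def scaling_factors(old: WseGeneration, new: WseGeneration) -> dict[str, float]:
    """Return how many times larger each headline metric is in `new` than in `old`."""
    return {
        "transistors": new.transistors / old.transistors,
        "cores": new.cores / old.cores,
        "on_chip_memory": new.on_chip_memory_gb / old.on_chip_memory_gb,
    }


factors = scaling_factors(WSE_1, WSE_2)
for metric, factor in factors.items():
    print(f"{metric}: {factor:.2f}x")
```

Each of the three metrics comes out slightly above 2x, so by these figures the WSE-2 roughly doubled the WSE-1 in transistors, cores, and on-chip memory.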

Cerebras and AI

1. Wafer Scale Engine (WSE)

Cerebras has developed the Wafer Scale Engine (WSE), the largest computer chip ever built, designed to efficiently handle large AI and machine learning (ML) workloads. The WSE-2 features 2.6 trillion transistors, 850,000 AI-optimized cores, and 40 GB of on-chip memory, providing high-performance computing for AI applications.

2. Accelerating AI Training

Training AI models, especially deep learning models, requires significant computational power. Cerebras’ WSE chips are engineered to reduce the time and resources needed for training complex models.

3. Scalability for Large Models

As AI models grow in size, traditional hardware can become a bottleneck. Cerebras technology is designed to scale efficiently, supporting the training and deployment of large language models at the scale of GPT-3, GPT-4, and beyond.


Wafer Scale Engine

The Wafer Scale Engine (WSE) is a wafer-sized processor designed to accelerate data processing in AI systems. Its cores are arranged in a two-dimensional grid joined by an on-chip fabric, so computational tasks are distributed across many cores on one chip rather than across separate devices, which enables faster and more scalable performance. The design is particularly useful for large datasets and complex algorithms, and it supports applications in industries such as finance, healthcare, and autonomous systems. It is intended to fit into existing development workflows, improving efficiency and reducing processing time.

Key Features of the Wafer Scale Engine (WSE)

Size and Scale

The WSE is the largest computer chip ever built, measuring 46,225 square millimeters (about the size of a dinner plate). It is fabricated as a single chip spanning a silicon wafer, unlike traditional chips, which are cut from the wafer as many smaller dies.
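The area figure is easier to picture as an edge length. The sketch below assumes the chip is square, which is an assumption made for this calculation rather than something stated in the text, and the variable names are illustrative only.

```python
import math

WSE_AREA_MM2 = 46_225  # chip area quoted in the text, in square millimeters

# Assumption: the chip is a square, so the edge length is the square root of the area.
side_mm = math.isqrt(WSE_AREA_MM2)

# Check that the area is a perfect square, so the integer root loses nothing.
is_perfect_square = side_mm * side_mm == WSE_AREA_MM2

side_in = side_mm / 25.4  # 25.4 mm per inch

print(f"edge length: {side_mm} mm (about {side_in:.1f} in), exact: {is_perfect_square}")
```

Under that assumption the edge is 215 mm, or roughly 8.5 inches, which is consistent with the dinner-plate comparison.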

Transistors and Cores

The WSE-2 (second generation) contains 2.6 trillion transistors and 850,000 AI-optimized cores, designed for parallel processing to handle AI and machine learning workloads efficiently.

Memory

The WSE-2 has 40 GB of on-chip SRAM (the WSE-1 has 18 GB), enabling ultra-fast data access. Keeping this much memory on the chip reduces reliance on external memory, which minimizes latency and improves performance.
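Because the on-chip memory is spread across the cores, a rough per-core share follows from the WSE-2 figures quoted in this section (40 GB and 850,000 cores). This is back-of-the-envelope arithmetic under two assumptions that the text does not confirm: decimal gigabytes (10^9 bytes) and an even split of memory across cores.

```python
GB = 10**9  # assumption: decimal gigabytes

memory_bytes = 40 * GB  # WSE-2 on-chip SRAM, as quoted in the text
cores = 850_000         # WSE-2 AI-optimized cores, as quoted in the text

# Assumption: memory is divided evenly across all cores.
bytes_per_core = memory_bytes / cores
kb_per_core = bytes_per_core / 1000

print(f"about {kb_per_core:.0f} KB of SRAM per core")
```

The result is on the order of tens of kilobytes per core, which illustrates the design choice: each core works from a small, local, very fast memory instead of a large shared external one.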

Bandwidth

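The WSE-2 provides 220 petabits per second of fabric bandwidth between cores, alongside 20 petabytes per second of on-chip memory bandwidth. The fabric lets cores exchange data without leaving the wafer, which is crucial for large-scale AI models.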

Energy Efficiency

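The WSE is built for energy efficiency. Because data stays on a single chip instead of moving between many separate devices, it is designed to use less power than a cluster of traditional GPUs or CPUs running a comparable workload.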

Applications of the WSE

AI and Machine Learning

The WSE accelerates training and inference of deep learning models, including natural language processing (NLP), computer vision, and generative AI applications.

Scientific Research

It supports scientific simulations such as climate modeling, drug discovery, and molecular dynamics.

High-Performance Computing (HPC)

The WSE is ideal for HPC tasks that require massive computational power, including fluid dynamics and astrophysics.